From memory, the "80% rule" came about mainly because people were using "no name" power supplies. The output rating on those was always a bit suspect, so it was safer to run them at less than full rated power. It has no bearing on the efficiency of the power supply, which is about conversion losses: more power (watts) is drawn from the mains input than is delivered to the low-voltage output.
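To put a number on the conversion loss, here is a rough Python sketch. The 85% efficiency and 100 W load are made-up illustrative values, not taken from any particular supply, and `input_power` is just a name I picked for the example.

```python
def input_power(output_w, efficiency):
    """Mains power (W) drawn to deliver output_w at the low-voltage side.

    efficiency is a fraction between 0 and 1 (0.85 means 85%).
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    return output_w / efficiency


# Assumed example values: 100 W load, 85% efficient supply.
load_w = 100.0
drawn_w = input_power(load_w, 0.85)
print(f"{drawn_w:.1f} W from the mains, {drawn_w - load_w:.1f} W lost as heat")
# prints "117.6 W from the mains, 17.6 W lost as heat"
```

Note this is a separate question from how much of the rated output you can safely use.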

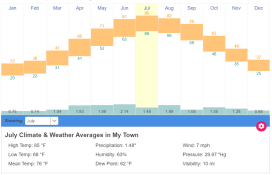

Proper power supplies will also have a "derating table" which shows how the maximum available output power reduces under varying conditions, the main one being increased temperature. If you're in a cool climate there should be no reason you can't run a good branded power supply at its full rated power. High-temperature operation, caused by high ambient temperature and/or poor air flow, may reduce the maximum safe power output to as low as 50% of the rated maximum.
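As a rough illustration of how a derating curve gets applied, the sketch below assumes a simple linear derating: full power up to 50 °C, falling to 50% at 70 °C. Those temperatures and the straight-line shape are assumptions for the example only; the real figures must come from the datasheet for your specific supply.

```python
def derated_power(rated_w, ambient_c, knee_c=50.0, max_c=70.0, min_fraction=0.5):
    """Maximum output (W) at a given ambient temperature.

    Hypothetical linear curve: 100% up to knee_c, then falling in a
    straight line to min_fraction of rated power at max_c. Above max_c
    the value is held at the minimum, although a real supply should
    not be operated beyond its specified temperature range.
    """
    if ambient_c <= knee_c:
        return rated_w
    if ambient_c >= max_c:
        return rated_w * min_fraction
    slope = (1.0 - min_fraction) / (max_c - knee_c)
    return rated_w * (1.0 - slope * (ambient_c - knee_c))


# Assumed example: a 200 W supply at a few ambient temperatures.
for temp_c in (25, 50, 60, 70):
    print(f"{temp_c} C: {derated_power(200.0, temp_c):.0f} W available")
```

With these assumed numbers, a 200 W supply would be good for the full 200 W at 25 °C but only about 150 W at 60 °C.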
