nutz4lights

Full time elf

I am pretty decent with electrical stuff, so I am a bit stumped by where I sit tonight...

I posted in another thread about my first experience with 12V pixel strings, not strips. After finally getting them working with my P12R, I decided to measure the DC current running through the string with white illumination, which is the maximum current setting. I put my meter inline like this:

12V output from P12R --> meter red --> meter black out --> 12V input to string

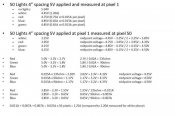

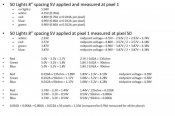

I measured 3.80A for 93 lights, which is 41mA per pixel (again, these are the 12V pixels, not strip). That is about 14mA per color in R-G-B, assuming that they all draw equally from the IC.

So I decided to try the same thing with a comparable square pixel 5V light string. I ran the same measurement setup as above (although switching to my 5V power supply). I measured 1.2A for a 50 count string using white illumination, which is 24mA per pixel, and that is where I am lost.

Something must be wrong, right? That is only 0.12W per bulb (24mA x 5V), and these are supposed to be 0.3W, aren't they?
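To double check the per-pixel math, here is a quick sketch that turns the two meter readings above into current and power per pixel. The function name `per_pixel` is just something made up for illustration, and it assumes the string actually sees the nominal supply voltage (12V or 5V) with no voltage drop along the wire.

```python
def per_pixel(total_amps, pixel_count, supply_volts):
    """Return (milliamps per pixel, watts per pixel) from one meter reading.

    Assumes every pixel draws the same current and that the nominal
    supply voltage is what the pixels actually see (no voltage drop).
    """
    amps = total_amps / pixel_count
    watts = amps * supply_volts
    return amps * 1000.0, watts


# Readings from the post, all pixels set to white.
ma_12v, w_12v = per_pixel(3.80, 93, 12.0)  # 12V string, 93 pixels
ma_5v, w_5v = per_pixel(1.2, 50, 5.0)      # 5V string, 50 pixels

print(f"12V string: {ma_12v:.1f} mA, {w_12v:.2f} W per pixel")
print(f"5V string:  {ma_5v:.1f} mA, {w_5v:.2f} W per pixel")
```

With the numbers above this works out to roughly 41mA per pixel on the 12V string and 24mA (0.12W) per pixel on the 5V string.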

This is all very important to me because I am finalizing designs for my display for the year, and the power is obviously a huge part of deciding which way things will go... For instance: the P12R can handle up to 30A per bank of six outputs, which is up to 5A per output. I was planning on running 100 pixels per output on all the outputs for a few different design elements (pixel megatree, pixel twig trees, and pixel palm tree wrap). At 0.3W per pixel, that is 0.3W x 100 x six = 180W per bank, or 36A at 5V, and that means running power separate from the P12R... whereas... if the 100 pixel outputs really only draw 2.4A each based on my measurements above, that is only 14.4A per bank on the P12R, and I can run the power integrated with the P12R instead of using separate power supplies and cabling...
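The bank comparison can be sketched the same way. This is only rough budgeting under the numbers quoted in this post (30A per bank, six outputs per bank, 100 pixels per output); the names `BANK_LIMIT_A` and `bank_current` are made up for illustration, and it leaves out any safety margin.

```python
BANK_LIMIT_A = 30.0    # P12R limit per bank, as quoted in the post
OUTPUTS_PER_BANK = 6   # six outputs share one bank


def bank_current(amps_per_pixel, pixels_per_output, outputs=OUTPUTS_PER_BANK):
    """Total current for one bank with every pixel at full white."""
    return amps_per_pixel * pixels_per_output * outputs


# Case 1: the 0.3W per pixel figure, converted to amps at 5V.
rated_a = bank_current(0.3 / 5.0, 100)

# Case 2: the measured draw, 1.2A across a 50 pixel string.
measured_a = bank_current(1.2 / 50, 100)

for label, amps in (("0.3W figure", rated_a), ("measured", measured_a)):
    verdict = "fits" if amps <= BANK_LIMIT_A else "exceeds"
    print(f"{label}: {amps:.1f} A per bank, {verdict} the {BANK_LIMIT_A:.0f} A limit")
```

The 0.3W figure gives 36A per bank, which is over the limit, while the measured draw gives 14.4A per bank, which is under it.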

Thanks for reading... I look forward to the comments and discussion...
